
The Agent2Agent (A2A) protocol is Google’s answer to the rapidly growing ecosystem surrounding AI agents. In simplest terms, A2A enables communication between agents, allowing them to collaborate on tasks and work toward a common goal. Like the Model Context Protocol (MCP), Anthropic’s “universal remote” that standardizes how AI systems connect with tools and data, A2A aims to remove the complexity of multi-agent integration using a unified approach.

This guide explores Google’s Agent2Agent protocol, explaining how it works in beginner-friendly terms. By the end of this article, you’ll know:

What A2A is and what makes it tick

The relationship between A2A and MCP

How to get started with A2A in your own project

Let’s start by briefly reviewing the context surrounding A2A’s launch and how various AI integration approaches influenced its creation.

Context behind Google’s A2A explained

AI has undergone dramatic changes since the release of GPT-3.5 in late 2022, which marked an inflection point for AI adoption thanks to its commercial viability. As these models became more powerful and useful, developers sought ways to extend their capabilities beyond the bounds of a chat window.

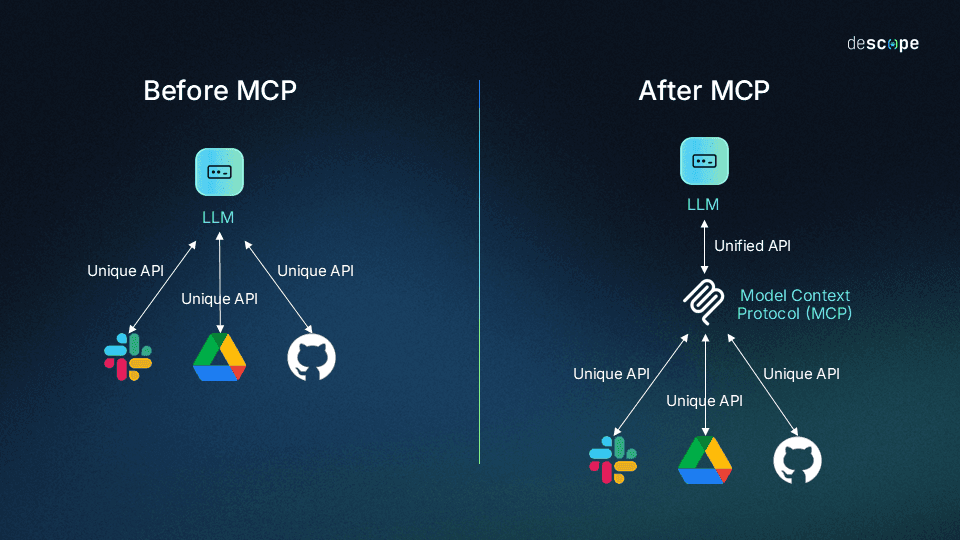

Function calling emerged as an early and obvious solution, allowing LLMs to connect to external services on a one-to-one basis through APIs. This is how features like GPT Actions work. However, each AI vendor and implementer approached integration a little differently, creating a fragmented ecosystem where interoperability was all but absent.

MCP gained immediate traction for its potential to solve the “NxM problem,” a challenge in which the number of agents/AI systems (N) is multiplied by the number of tools/data sources (M). This results in a daunting amount of custom integration, where each combination of AI app and tool needs bespoke development to connect.
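To make the arithmetic concrete, here is a minimal sketch comparing the two approaches. The function names and the sample counts are illustrative assumptions, and the "N + M" figure reflects the common framing that each app and each tool implements a shared protocol once.

```python
def custom_integrations(num_apps: int, num_tools: int) -> int:
    """Point-to-point: every app/tool pair needs its own connector."""
    return num_apps * num_tools


def standardized_integrations(num_apps: int, num_tools: int) -> int:
    """Shared protocol: each app and each tool implements it once."""
    return num_apps + num_tools


apps, tools = 5, 20  # illustrative numbers, not taken from any survey
print(custom_integrations(apps, tools))        # 100
print(standardized_integrations(apps, tools))  # 25
```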

To that end, MCP serves two purposes: standardizing context (good for users) and simplifying integration (good for devs). However, Google points out that MCP doesn’t enable agents to communicate directly with each other, which is where A2A comes in.

Like MCP, A2A unifies how AI agents talk to something—in this case, other agents. It’s worth noting that the term “agent” is a bit murky: some parties split hairs between agents and “agentic AI”, while others (like Amazon) point to semi-autonomous goal fulfillment as the defining trait.

Even with some uncertainty about their exact definition, virtually all AI agents distinguish themselves from “vanilla” LLMs through specialization; one might be good at a specific scripting language, another at academic research, and so on. That’s how we’ll be defining agents from this point forward. To form “team-ups” combining agentic specialties, devs built custom integrations and proprietary frameworks to bring them together. Unfortunately, these didn’t scale well or address the interoperability issues, even with MCP’s potential sampling capabilities.

Google’s A2A protocol solves the problem of agentic collaboration while adding a few additional tricks to the AI developer toolkit.

What is Google’s Agent2Agent (A2A) protocol?

A2A is an open protocol that lets AI agents talk to each other, regardless of who built them or how they were built. Think of it as a translator that knows every possible language, bridging the gap between different frameworks (LangChain, AutoGen, LlamaIndex) and different vendors.

Released in early April 2025 at Google Cloud Next, A2A was built with input from more than 50 technology partners, including major players like Atlassian, Salesforce, SAP, and MongoDB. This collaborative approach means A2A isn’t just Google’s protocol—it’s a broader industry push toward standardization.

At its core, A2A treats each AI agent like a networked service with a standard interface. You can think of it like how web browsers and servers communicate using HTTP (Hypertext Transfer Protocol), but for AI agents instead of websites. In the same way that MCP solves the NxM problem, A2A simplifies plugging different agents together without writing custom code for each pairing.

Four key capabilities of A2A protocol

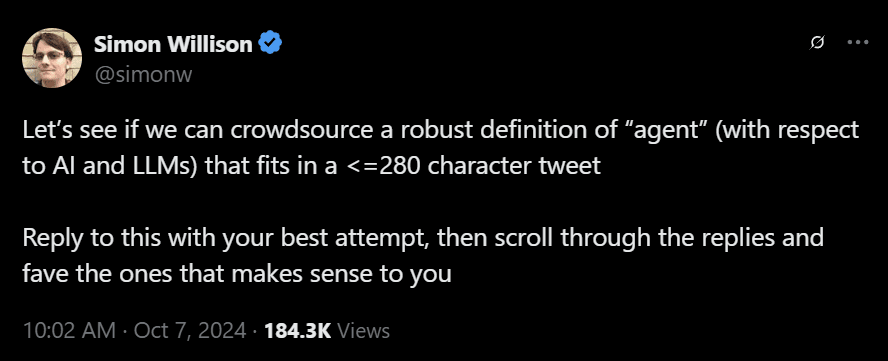

A2A is built around four central capabilities that make agent collaboration possible. To better understand what these are, you’ll need to know a few terms:

Client agent/A2A client: App or agent that consumes A2A services. This is your “main” agent, the one that puts together tasks and communicates them to other agents.

Remote agent/A2A server: Agent exposing an HTTP endpoint that uses the A2A protocol. These are the supplementary agents that handle task completion.

Capability discovery: Asks, “What can you do?” Allows capabilities to be advertised through “Agent Cards” (JSON files), helping clients to identify the best remote agent for a specific task. This is essentially a machine-readable profile that helps your client understand what services other agents can provide.

Task management: Asks, “Is everyone working together, and what’s your status?” Ensures communication between client and remote agents is aimed at task completion, with a specific task object and lifecycle. For long-running tasks, agents can communicate to stay in sync.

Collaboration: Asks, “What’s the context, reply, task output (artifacts), or user instruction?” Agents can send messages to each other to create a conversation flow that goes back and forth.

User experience negotiation: Asks, “How should I show content to the user?” Each message contains “parts” with specific content types, which means agents can negotiate the correct format and understand UI capabilities like iframes, video, web forms, and more. Agents adapt how they present information based on what the receiving agent (client) can handle.
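Capability discovery can be pictured as a lookup over Agent Cards. The sketch below is a simplified illustration: the card fields (`name`, `url`, `skills`, `tags`), the example URLs, and the `find_agent` helper are assumptions chosen for readability, and real Agent Cards carry more detail.

```python
from typing import Optional

# Simplified, hypothetical Agent Cards; real cards carry more fields.
AGENT_CARDS = [
    {
        "name": "FlightFinder",
        "url": "https://flights.example.com/a2a",
        "skills": [{"id": "search-flights", "tags": ["flights", "travel"]}],
    },
    {
        "name": "HotelScout",
        "url": "https://hotels.example.com/a2a",
        "skills": [{"id": "search-hotels", "tags": ["hotels", "travel"]}],
    },
]


def find_agent(cards: list[dict], needed_tag: str) -> Optional[dict]:
    """Return the first card advertising a skill tagged with needed_tag."""
    for card in cards:
        for skill in card.get("skills", []):
            if needed_tag in skill.get("tags", []):
                return card
    return None  # no remote agent advertises this capability
```

A client could call `find_agent(AGENT_CARDS, "hotels")` to select the hotel specialist, and fall back to another strategy when the result is `None`.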

How A2A works with AI agents

A2A follows a client-server model where agents communicate over standard web protocols like HTTP using structured JSON messages. This approach improves compatibility with existing infrastructure while standardizing agent communication.
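As a rough picture of what one of these structured JSON messages might look like, the sketch below builds a request body for sending a task. It assumes a JSON-RPC 2.0 style envelope with a `tasks/send` method, as in early public versions of the protocol; treat the exact field names as assumptions to verify against the current specification. The `build_send_request` helper is hypothetical.

```python
import json
import uuid


def build_send_request(text: str) -> dict:
    """Wrap a user message in a JSON-RPC 2.0 style request (assumed shape)."""
    return {
        "jsonrpc": "2.0",
        "id": 1,
        "method": "tasks/send",
        "params": {
            "id": str(uuid.uuid4()),  # client-generated task ID
            "message": {
                "role": "user",
                "parts": [{"type": "text", "text": text}],
            },
        },
    }


payload = build_send_request("Find flights to Tokyo from May 15 to May 20")
body = json.dumps(payload)  # the string a client would send as the POST body
```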

Let’s explore how A2A accomplishes its goals by breaking down the components that make up the protocol, including the concept of “opaque” agents. Then, we’ll take a closer look at a typical interaction flow.

Core components of A2A

Agent Card: Typically hosted at a well-known URL (like /.well-known/agent.json), this JSON file describes an agent’s capabilities, skills, endpoint URL, and authentication requirements. Think of this as an agent’s machine-readable “résumé” that helps other agents determine whether to engage with it.

A2A Server: An agent that exposes HTTP endpoints that use the A2A protocol. This is the “remote agent” in A2A, which receives requests from the client agent and handles tasks. Servers advertise their capabilities via Agent Cards.

A2A Client: The app or AI system that consumes A2A services. The client constructs tasks and distributes them to the appropriate servers based on their capabilities and skills. This is the “client agent” in A2A, which orchestrates workflows with specialized servers.

Task: The central unit of work in A2A. Each task has a unique ID and progresses through defined states (like submitted, working, completed, etc.). Tasks serve as containers for the work being requested and executed.

Message: Communication turns between the client and the agent. Messages are exchanged within the context of a task and contain Parts that deliver content.

Part: The fundamental content unit within a Message or Artifact. Parts can be:

TextPart: For plain text or formatted content

FilePart: For binary data (with inline bytes or a URI reference)

DataPart: For structured JSON data (like forms)

Artifact: The output generated by an agent during a task. Artifacts also contain Parts and represent the final deliverable from the server back to the client.
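One way to see how these components nest is to model them as plain data classes. This is a minimal sketch, not the protocol's official schema: the attribute names, the `"user"` and `"agent"` role strings, and the sample values are assumptions made for illustration.

```python
import uuid
from dataclasses import dataclass, field
from typing import Any, Union


@dataclass
class TextPart:
    text: str


@dataclass
class FilePart:
    uri: str  # a real FilePart may carry inline bytes instead


@dataclass
class DataPart:
    data: dict[str, Any]


Part = Union[TextPart, FilePart, DataPart]


@dataclass
class Message:
    role: str  # assumed: "user" for the client, "agent" for the server
    parts: list[Part]


@dataclass
class Artifact:
    name: str
    parts: list[Part]


@dataclass
class Task:
    id: str = field(default_factory=lambda: str(uuid.uuid4()))
    state: str = "submitted"
    messages: list[Message] = field(default_factory=list)
    artifacts: list[Artifact] = field(default_factory=list)


# A task collects the conversation (messages) and the output (artifacts).
task = Task()
task.messages.append(Message("user", [TextPart("Find flights to Tokyo")]))
task.artifacts.append(Artifact("flights", [DataPart({"options": 3})]))
task.state = "completed"
```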

Concept of opaque agents

Google casually dropped the term “opaque” into their description of A2A-enabled agents, which left some scratching their heads. The term “opaque” in the context of A2A essentially means agents can collaborate on tasks without revealing their internal logic:

An agent only needs to expose what tasks it can perform, not how it performs them

Proprietary algorithms or data can remain private

Agents can be swapped out with alternative implementations as long as they support the same capabilities

Organizations can integrate third-party agents with fewer security concerns, since neither side has to expose its internals

A2A’s approach makes it easier to build complex, multi-agent systems while maintaining high security standards and keeping trade secrets private.
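The idea of opacity resembles programming against an interface. The sketch below is an in-process analogy only (real A2A agents sit behind HTTP endpoints), and every class and function name in it is hypothetical.

```python
from typing import Protocol


class FlightAgent(Protocol):
    """The only thing a client sees: what the agent can do."""

    def search_flights(self, destination: str) -> list[str]: ...


class VendorAAgent:
    def search_flights(self, destination: str) -> list[str]:
        # Internal logic (pricing models, data sources) stays hidden.
        return [f"A-101 to {destination}"]


class VendorBAgent:
    def search_flights(self, destination: str) -> list[str]:
        # A different implementation behind the same advertised capability.
        return [f"B-202 to {destination}"]


def find_flights(agent: FlightAgent, destination: str) -> list[str]:
    # The client depends only on the capability, not on how it is fulfilled.
    return agent.search_flights(destination)
```

Because `find_flights` never inspects the agent's internals, swapping `VendorAAgent` for `VendorBAgent` requires no change on the client side.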

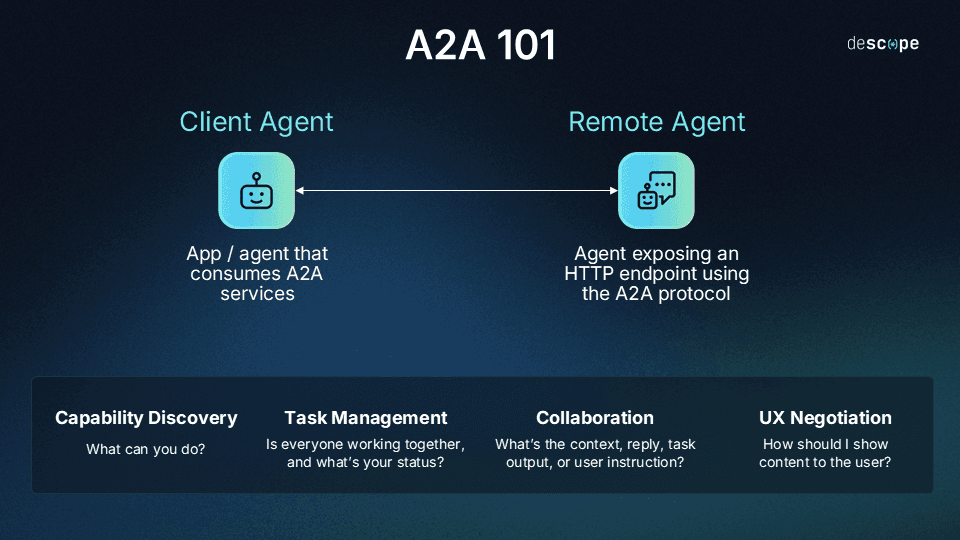

A2A interaction flow

When agents communicate via A2A, they follow a structured sequence:

Discovery Phase: Imagine a user asking their main AI agent, “Can you help me plan a business trip to Tokyo next month?” The AI they’re interacting with recognizes the need to find specialized agents for flights, hotels, and local activities. To begin, the client agent (the one they’re talking with) identifies remote agents that can help with each task. It retrieves the remote agents’ Agent Cards to learn whether they’re a good fit for the job.

Task Initiation: With the team assembled, it’s time to assign jobs. Picture the client agent saying to the agent specializing in travel booking, “Find flights to Tokyo from May 15th to the 20th.” The client sends a request to the server’s endpoint (typically a POST to /tasks/send), creating a new task with a unique ID. This includes the initial message detailing what the client wants the server to do.

Processing: The booking specialist agent (server/remote agent) starts searching available flights matching the criteria. It might take one of several actions:

Complete the task immediately and return an artifact: “Here are the available flights.”

Request more information (setting the state to input-required): “Do you prefer a specific airline?”

Begin working on a long-running task (setting the state to working): “I’m comparing rates to find you the best deal.”

Multi-Turn Conversations: If more information is needed, the client and server exchange additional messages. The server might ask clarifying questions (“Are connections okay?”), and the client responds (“No, direct flights only.”), all within the context of the same task ID.

Status Updates: For tasks that take time to complete, A2A supports several notification mechanisms:

Polling: The client periodically checks the task status

Server-Sent Events (SSE): The server streams real-time updates if the client is subscribed

Push notifications: The server can POST updates to a callback URL if provided

Task Completion: When finished, the server marks the task as completed and returns an artifact containing the results. Alternatively, it might mark the task as failed if it encountered problems, or canceled if the task was terminated.
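The sequence above can be sketched with an in-memory stand-in for the remote agent, so the state changes are visible without any networking. Everything here is an illustrative assumption: the `StubFlightAgent` class, its `send` and `get` methods, the rule it uses to decide when to ask a question, and the sample artifact text.

```python
import uuid


class StubFlightAgent:
    """In-memory stand-in for a remote agent (no HTTP involved)."""

    def __init__(self) -> None:
        self.tasks: dict[str, dict] = {}

    def send(self, task_id: str, text: str) -> dict:
        """Create the task on first contact, or continue it on later turns."""
        task = self.tasks.setdefault(
            task_id,
            {"id": task_id, "state": "submitted", "history": [], "artifacts": []},
        )
        task["history"].append(text)
        if "direct" not in text.lower():
            # Missing detail: pause and ask the client a question.
            task["state"] = "input-required"
            task["question"] = "Are connections okay?"
        else:
            task["state"] = "working"
        return task

    def get(self, task_id: str) -> dict:
        """Polling endpoint: a 'working' task finishes on the next check."""
        task = self.tasks[task_id]
        if task["state"] == "working":
            task["artifacts"].append("Example direct flight, May 15")
            task["state"] = "completed"
        return task


def run_task(agent: StubFlightAgent, request: str, clarification: str) -> dict:
    task_id = str(uuid.uuid4())
    task = agent.send(task_id, request)
    if task["state"] == "input-required":
        # Multi-turn: the answer travels under the same task ID.
        task = agent.send(task_id, clarification)
    while task["state"] == "working":
        task = agent.get(task_id)  # a real client would wait between polls
    return task


result = run_task(
    StubFlightAgent(),
    "Find flights to Tokyo, May 15-20",
    "No, direct flights only.",
)
```

Here the first message triggers `input-required`, the clarification moves the task to `working`, and a single poll brings it to `completed` with one artifact attached.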

Throughout this process, the main agent might simultaneously work with other specialist agents: a hotel whiz, a local transportation guru, an activity mastermind. The main agent will create an itinerary by combining all these results into a comprehensive travel plan, then present it to the user.
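Working with several specialists at once maps naturally onto concurrent requests. The following sketch uses Python's `asyncio` with a stubbed `ask_agent` coroutine in place of real A2A round trips; the agent names and the `build_itinerary` helper are hypothetical.

```python
import asyncio


async def ask_agent(name: str, request: str) -> str:
    """Stand-in for an A2A round trip to one remote agent."""
    await asyncio.sleep(0)  # yield control, as real network I/O would
    return f"{name}: result for '{request}'"


async def build_itinerary(destination: str) -> list[str]:
    requests = {
        "flights": f"Find flights to {destination}",
        "hotels": f"Find hotels in {destination}",
        "activities": f"Suggest activities in {destination}",
    }
    # Run all specialist requests concurrently; results keep request order.
    return await asyncio.gather(
        *(ask_agent(name, req) for name, req in requests.items())
    )


itinerary = asyncio.run(build_itinerary("Tokyo"))
```

The client agent would then merge the three results into a single plan before presenting it to the user.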

In short, A2A doesn’t simply let AI agents chat about a shared task. It empowers many agents to contribute and collaborate toward the same goal, while a client agent assembles a result greater than the sum of its parts.

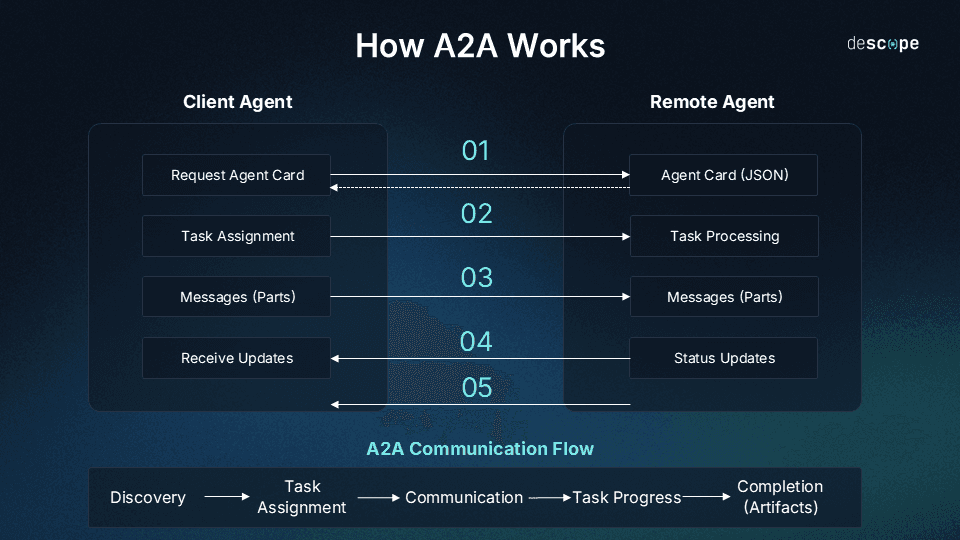

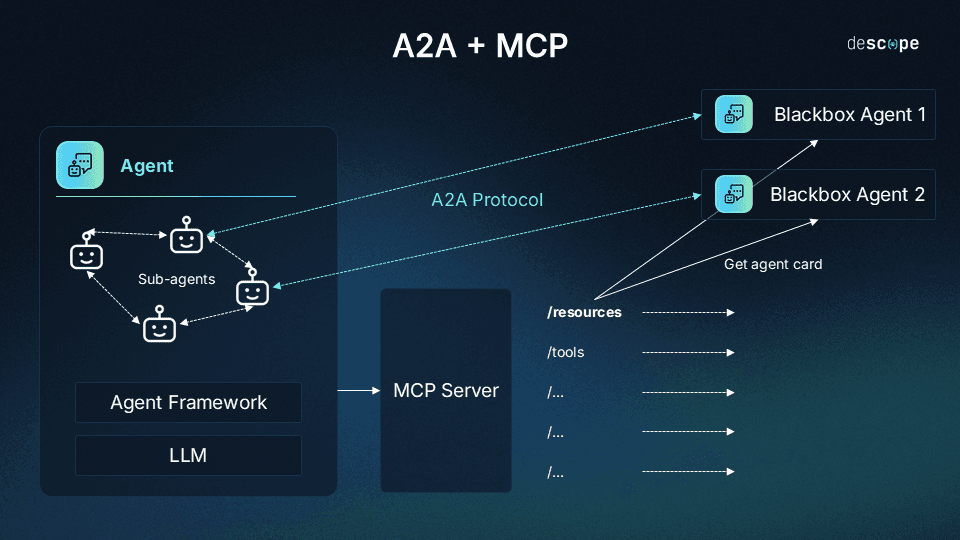

A2A vs. MCP: Complementary, not competitive

Even though A2A and MCP might seem like they’re vying for the same space, they’re actually designed to work together. They address different (and complementary) aspects of AI integration.

MCP connects LLMs (or agents) to tools and data sources (vertical integration)

A2A connects agents to other agents (horizontal integration)

Google deliberately positioned A2A as complementary to MCP. To emphasize this pluralistic design philosophy, Google launched its Vertex AI Agent Builder with built-in MCP support on the same day A2A was released. While this certainly helps Google get its technology in front of more devs, this is far from a marketing-centric move; it makes perfect technical sense.

Here’s a useful analogy to illustrate that point: If MCP is what enables agents to use tools, then A2A is their conversation while they work. MCP equips individual agents with capabilities, while A2A helps them coordinate those capabilities as a team.

In a comprehensive setup, an agent might use MCP to pull information from a database, then use A2A to pass that information to another agent for analysis. The two protocols can work together to create a more complete solution for complex tasks, while simplifying the development hurdles we’ve faced since LLMs first became mainstream.

A2A security standards

A2A was built with enterprise security in mind. Apart from the exclusive use of opaque agents, each Agent Card specifies what authentication method clients must use (API keys, OAuth, etc.), and all communications are designed to occur over HTTPS. This means organizations can establish their own policies about which agents can talk to each other and what data they can share.

Like the MCP specification for authorization, A2A leverages existing web security standards rather than inventing new modalities, making it immediately compatible with current identity systems. Since all interactions occur through well-defined endpoints, observability becomes a straightforward affair: plug in your preferred monitoring tools and get a unified audit trail.
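As a small illustration of how a client might act on the authentication requirements in an Agent Card, the sketch below maps an advertised scheme to standard HTTP headers. The card layout (`authentication.schemes`), the scheme names, the `X-API-Key` header, and the `build_auth_headers` helper are all assumptions for this example rather than details confirmed by the protocol.

```python
def build_auth_headers(card: dict, credentials: dict[str, str]) -> dict[str, str]:
    """Pick the first scheme the card accepts that we hold a credential for."""
    schemes = card.get("authentication", {}).get("schemes", [])
    for scheme in schemes:
        secret = credentials.get(scheme)
        if secret is None:
            continue  # no credential on hand for this scheme
        if scheme == "bearer":
            return {"Authorization": f"Bearer {secret}"}
        if scheme == "apiKey":
            return {"X-API-Key": secret}  # header name is an assumption
    raise ValueError(f"No usable credential for schemes: {schemes}")


card = {"authentication": {"schemes": ["bearer"]}}  # hypothetical card excerpt
headers = build_auth_headers(card, {"bearer": "example-token"})
```

Raising an error when no scheme matches keeps the client from silently sending unauthenticated requests.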

A2A ecosystem and adoption

The protocol launched with impressive backing from over 50 technology partners, many of whom either currently support or intend to support A2A with their own agents. Google has also integrated A2A into its Vertex AI platform and Agent Development Kit (ADK), making it the simplest entry point for developers already in the Google Cloud ecosystem.

Organizations considering A2A implementation should take these points into consideration:

Reduced integration cost: Instead of building custom code (or paying for proprietary code) for each agent pairing, developers can implement A2A once and reuse it across agents.

Relatively recent release: A2A is still in its early stages of wide release, meaning it’s yet to receive the kind of real-world testing that reveals shortcomings at scale.

Futureproofing: Because A2A is an open protocol, new and old agents alike can plug into its ecosystem without extra effort.

Agent limitations: While A2A could be a giant leap forward for truly autonomous AI, it’s still task-oriented and doesn’t operate fully independently.

Vendor agnosticism: A2A doesn’t lock your organization into any specific model, framework, or vendor, meaning you’re free to mix and match across the entire AI landscape.

The future of the Agent2Agent protocol

Looking ahead, we can expect further improvement to A2A, as outlined in the protocol’s roadmap. Planned enhancements include:

Formalized authorization schemes and optional credentials directly within Agent Cards

Dynamic UX negotiation within ongoing tasks (like adding audio/video mid-conversation)

Improved streaming performance and push notification mechanics

But perhaps the most interesting long-term possibility is that A2A will become for agent development what HTTP was for web communication: a spark that lights an explosion of innovation. As adoption grows, we might see pre-packaged “teams” of agents specialized for particular industries, and eventually, a seamless global network of AI agents that your client can tap into.

For developers and organizations exploring AI implementation, now is the perfect time to learn and build. Together, A2A and MCP are the beginning of a more standardized, secure, and enterprise-ready approach to AI.
