
The Model Context Protocol (MCP) is an emerging open standard that allows AI systems (like LLMs) to interact with external tools and data in a standardized way. Early MCP deployments ran the MCP server and client together in a local and controlled environment, meaning there was no need for complex authorization.

As organizations begin to deploy MCP servers remotely, the need to better secure sensitive data and operations has become urgent. To answer this need, the MCP specification introduced a new authorization component based on OAuth 2.1 with the goal of leveraging battle-tested standards to protect MCP endpoints.

This guide covers the core concepts of MCP authorization and explains how those elements are sometimes misapplied or misunderstood.

We’ll cover:

Why OAuth-style authorization matters for MCP

Requirements MCP places on implementers

Current challenges being discussed in the community

Insights into open questions about MCP

The evolution of MCP and authentication

Originally, MCP clients could talk to MCP servers directly through stdio (often on the same machine), so authentication was minimal. As the MCP ecosystem evolved, MCP servers needed to be reachable across the network (e.g., over Streamable HTTP), for instance by third-party applications acting on behalf of users.

For example, a user might run an MCP client application that needs to access their data on a remote MCP server in a data center. The user (resource owner) must grant the client access to the server, ideally without handing out passwords or API keys. This is exactly the problem OAuth solves—delegated authorization.

Because modern organizations have existing identity providers (IdPs) or OAuth2 authorization servers, the maintainers of MCP chose to piggyback on OAuth 2.1 rather than creating a new scheme. This means an MCP server can trust tokens issued via an OAuth flow, and MCP clients can obtain those tokens by redirecting users to an authorization server.

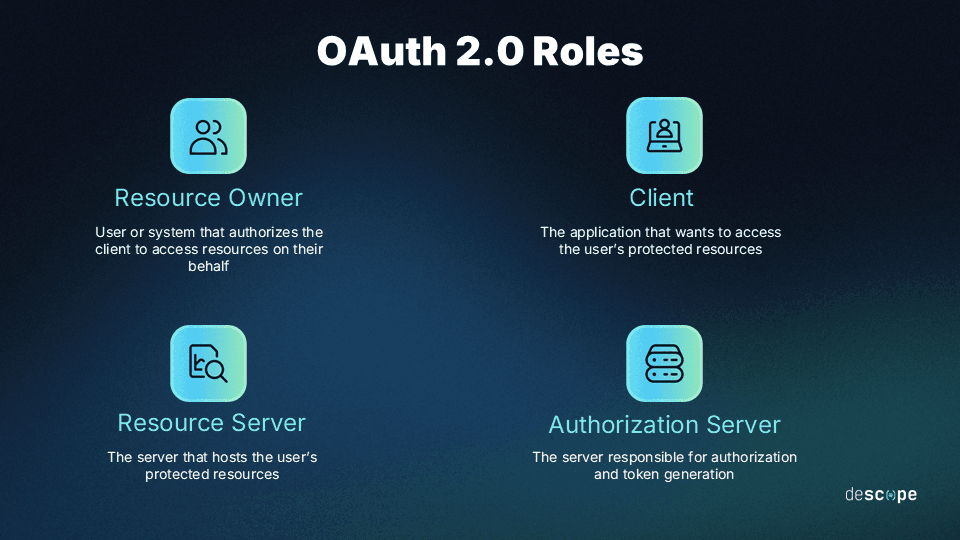

Familiar OAuth roles can now enter the MCP picture: the MCP server acts as the resource server, the OAuth IdP is the authorization server, and the MCP client is the OAuth client requesting access on behalf of the user. However, as we’ll see, the current MCP specification leaves the final implementation of the concepts up to interpretation.

Fortunately, the June 2025 revision represents a major upgrade of the specification, addressing earlier concerns (especially for enterprise) by clearly separating the MCP server (resource server) from the authorization server role.

Need a refresher on OAuth? Read our beginner-friendly guide.

MCP authorization requirements at a glance

At the time of writing, the MCP Authorization Specification establishes a framework based on OAuth 2.1 to secure interactions between MCP clients and servers:

| Requirement | Recommendation status | Description |
|---|---|---|
| OAuth 2.1 | MUST | Implement OAuth 2.1; PKCE mandatory for authorization code flows with public clients |
| Dynamic Client Registration (DCR) | SHOULD | Support RFC 7591 to allow clients to programmatically register with the authorization server |
| Authorization Server Metadata (ASM) | SHOULD (servers); MUST (clients) | Implement RFC 8414 discovery to expose auth server endpoints and capabilities |
| Resource Indicators | MUST | Support RFC 8707; MCP servers must validate tokens were issued specifically for them, rejecting tokens without them in the audience claim |
| Protected Resource Metadata (PRM) | MUST | MCP clients must use OAuth 2.0 Protected Resource Metadata for authorization server discovery |

Note that previously the specification called for fallback default endpoints at /authorize, /token, and /register. The June 2025 revision removed this mechanism in favor of mandatory RFC 9728 (Protected Resource Metadata).
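To make the Resource Indicators requirement from the table concrete, here is a minimal sketch of how a client might build an authorization request that names the target MCP server through the RFC 8707 `resource` parameter. The function name, endpoint, client ID, and URLs are hypothetical placeholders, not values defined by the MCP specification.

```python
from urllib.parse import urlencode


def build_authorization_url(authorization_endpoint: str, client_id: str,
                            redirect_uri: str, code_challenge: str,
                            resource: str, state: str, scope: str = "") -> str:
    """Assemble an authorization code request (hypothetical helper).

    `resource` is the RFC 8707 resource indicator: the MCP server the
    token is meant for, so the issued token can carry it as audience.
    """
    params = {
        "response_type": "code",
        "client_id": client_id,
        "redirect_uri": redirect_uri,
        "code_challenge": code_challenge,
        "code_challenge_method": "S256",
        "resource": resource,
        "state": state,
    }
    if scope:
        params["scope"] = scope
    return f"{authorization_endpoint}?{urlencode(params)}"


# All values below are illustrative assumptions.
url = build_authorization_url(
    "https://idp.example.com/authorize",
    client_id="example-client",
    redirect_uri="http://127.0.0.1:8765/callback",
    code_challenge="E9Melhoa2OwvFrEMTJguCHaoeK1t8URWbuGJSstw-cM",
    resource="https://mcp.example.com/mcp",
    state="xyz",
)
```

The same `resource` value would typically be repeated in the token request so the authorization server can bind the token to that MCP server.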

Why these components matter

OAuth 2.1: Provides a standardized security framework with mandatory PKCE to protect against authorization code interception attacks. Since most MCP clients are public (like CLI tools or apps), PKCE is always required.

Dynamic Client Registration: DCR allows MCP clients to obtain credentials (a client ID and possibly secrets) at runtime rather than requiring manual pre-registration. While MCP’s maintainers encourage this, not all IdPs support it, and it often requires initial access tokens or admin privileges. Thus, many enterprises may bypass this by pre-registering trusted clients.
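For a sense of what a DCR call carries, here is a sketch of a registration request body using RFC 7591 client metadata fields. The client name and redirect URI are made-up examples, and the helper is hypothetical; an authorization server may require additional fields or an initial access token.

```python
import json


def build_registration_request(client_name: str, redirect_uris: list) -> dict:
    """Build RFC 7591 client metadata for a public client (hypothetical helper)."""
    return {
        "client_name": client_name,
        "redirect_uris": list(redirect_uris),
        "grant_types": ["authorization_code", "refresh_token"],
        "response_types": ["code"],
        # "none" marks a public client that relies on PKCE, not a secret.
        "token_endpoint_auth_method": "none",
    }


# Illustrative values only; the body would be POSTed as JSON to the
# registration endpoint advertised in the authorization server metadata.
metadata = build_registration_request(
    "Example MCP Client", ["http://127.0.0.1:8765/callback"]
)
body = json.dumps(metadata)
```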

Authorization Server Metadata: ASM allows MCP clients to discover authentication endpoints automatically. Without this discovery mechanism, developers must hardcode endpoint locations, complicating interoperability. When ASM is implemented, an MCP client discovers the authorization server location via the Protected Resource Metadata document, then queries the authorization server's .well-known/oauth-authorization-server endpoint. The authorization server metadata is hosted by the IdP, not the MCP server.
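The metadata URL is derived from the issuer identifier. A minimal sketch of that derivation, following the RFC 8414 rule that the well-known segment is inserted between the host and any issuer path, might look like this (the function name and example issuers are assumptions):

```python
from urllib.parse import urlsplit, urlunsplit

WELL_KNOWN = "/.well-known/oauth-authorization-server"


def authorization_server_metadata_url(issuer: str) -> str:
    """Derive the RFC 8414 metadata URL for an issuer (hypothetical helper).

    Any path component of the issuer is appended after the well-known
    segment rather than placed before it.
    """
    parts = urlsplit(issuer)
    path = parts.path.rstrip("/")
    return urlunsplit((parts.scheme, parts.netloc, WELL_KNOWN + path, "", ""))


# Issuer without a path, and a multi-tenant issuer with one.
plain = authorization_server_metadata_url("https://idp.example.com")
tenant = authorization_server_metadata_url("https://idp.example.com/tenant1")
```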

Protected Resource Metadata: MCP servers MUST implement RFC 9728, which describes OAuth 2.0 Protected Resource Metadata (PRM). When authorization is required, servers return HTTP 401 with a WWW-Authenticate header containing the resource_metadata URL pointing to the PRM document. This document includes the authorization_servers field indicating where clients should obtain tokens. It can define multiple authorization servers, but the responsibility for selecting which one to use lies with the MCP client.
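The client side of this exchange can be sketched as two small steps: extract the `resource_metadata` URL from the 401 challenge, then choose an authorization server from the PRM document. The helper names, the example URLs, and the "first trusted issuer wins" selection policy are all assumptions for illustration; the specification leaves the selection policy to the client.

```python
import re
from typing import Optional

# Matches key="value" pairs inside a WWW-Authenticate challenge.
_PARAM = re.compile(r'(\w+)="([^"]*)"')


def resource_metadata_url(www_authenticate: str) -> Optional[str]:
    """Return the resource_metadata parameter from a Bearer challenge, if any."""
    params = dict(_PARAM.findall(www_authenticate))
    return params.get("resource_metadata")


def pick_authorization_server(prm: dict, trusted: set) -> Optional[str]:
    """Pick the first listed authorization server the client trusts."""
    for issuer in prm.get("authorization_servers", []):
        if issuer in trusted:
            return issuer
    return None


# Illustrative 401 challenge and PRM document.
challenge_header = (
    'Bearer resource_metadata='
    '"https://mcp.example.com/.well-known/oauth-protected-resource"'
)
prm_document = {
    "resource": "https://mcp.example.com",
    "authorization_servers": ["https://other.example.net", "https://idp.example.com"],
}
prm_url = resource_metadata_url(challenge_header)
issuer = pick_authorization_server(prm_document, {"https://idp.example.com"})
```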

Implementation challenges and community discussions

The MCP specification’s approach to authorization has sparked active community debate. The June 2025 revision successfully addressed the biggest concern by clearly separating the MCP server (resource server) from the authorization server. The MCP server now simply validates tokens issued by external authorization servers (e.g., enterprise IdPs). However, implementation challenges remain.

Key technical challenges

SDK integration issues: SDKs and reference implementations often assume MCP servers are also the authorization server, making third-party integration trickier.

Token lifecycle management: The spec mandates complex token mapping and tracking when using third-party authorization, which significantly increases the implementation burden.

Connection protocol limitations: SSE (Server-Sent Events) endpoints lack clear conventions for handling insufficient scopes or token expiry during active connections.

These challenges have led to proposals for decoupling authorization concerns and making token mapping optional, which could simplify deployment.

Best practices for MCP authorization implementation

With so much developer feedback, several MCP implementation best practices are emerging. They aim to simplify deployment while accounting for potential vulnerabilities in the current specification.

Separate authorization servers from resource servers

The June 2025 revision now mandates this separation. MCP servers act as OAuth 2.1 resource servers only, validating tokens issued by an external, dedicated authorization server. This aligns with enterprise architectures where security is centralized. The MCP server’s job is to validate tokens and enforce RBAC/permissions internally, but not to manage user logins or token issuance. Keeping MCP servers stateless (or at least not storing OAuth state) improves scalability and lowers maintenance overhead.
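As a rough sketch of the resource-server side, the check below rejects tokens whose audience does not include this MCP server or that have expired. It assumes the token's signature has already been verified by a JWT library and that `claims` is the decoded payload; the function name and example identifiers are hypothetical.

```python
import time
from typing import Optional


def claims_are_acceptable(claims: dict, expected_audience: str,
                          now: Optional[float] = None) -> bool:
    """Check audience and expiry on already-verified claims (hypothetical helper).

    This does NOT verify the signature; that must happen first.
    """
    now = time.time() if now is None else now
    aud = claims.get("aud")
    # Per the JWT format, "aud" may be a single string or a list of strings.
    audiences = [aud] if isinstance(aud, str) else list(aud or [])
    if expected_audience not in audiences:
        return False
    exp = claims.get("exp")
    return isinstance(exp, (int, float)) and exp > now


# Illustrative claims; the server identifier is an assumption.
ok = claims_are_acceptable(
    {"aud": ["https://mcp.example.com"], "exp": 200},
    "https://mcp.example.com",
    now=100,
)
```

Because the check needs nothing beyond the token itself, it fits the stateless design described above.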

Prevent token passthrough

When MCP servers need to call upstream APIs, they must act as OAuth clients to those services and obtain separate tokens. Never pass through the token received from the MCP client, as this creates confused deputy vulnerabilities. This is a scenario where downstream services may incorrectly trust tokens not intended for them. The June 2025 spec explicitly prohibits MCP servers from passing through tokens to upstream APIs.

Point MCP clients to an external authorization server

If you have an existing IdP (identity provider), configure your MCP server to advertise that issuer rather than hosting its own authorization endpoints. Under the June 2025 revision, this is done by listing the IdP in the authorization_servers field of the server's Protected Resource Metadata document and by responding with a WWW-Authenticate: Bearer … header whose resource_metadata parameter points to that document. The authorization server metadata itself stays with the IdP. This tells the MCP client exactly where to perform the OAuth exchange securely.

Implement function-level scopes for better AX

MCP authorization should optimize for Agent Experience (AX)—minimizing unnecessary friction for agents, and not bombarding humans with scope-related prompts. MCP tool calls may not map one-to-one with APIs, so scopes should be validated at the tool or function level. On top of scope validation for all routes at the middleware level, the request object can be parsed to determine the tool call or resource involved, checking for specific scopes (e.g. a view scope for viewing a document).
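One way to picture tool-level scope checks is a lookup from tool name to required scopes, compared against the space-delimited `scope` value of the token. The tool names and scope strings below are invented for illustration; the MCP specification does not define them.

```python
# Hypothetical mapping from MCP tool names to the scopes they require.
REQUIRED_SCOPES = {
    "view_document": {"docs:view"},
    "delete_document": {"docs:view", "docs:delete"},
}


def missing_scopes(tool_name: str, token_scope: str) -> set:
    """Return the scopes the token lacks for the requested tool.

    Unknown tools raise KeyError so they are denied by default
    rather than silently allowed.
    """
    required = REQUIRED_SCOPES[tool_name]
    granted = set(token_scope.split())
    return required - granted


# A token that may view but not delete.
view_gap = missing_scopes("view_document", "docs:view")
delete_gap = missing_scopes("delete_document", "docs:view")
```

Returning the missing scopes, rather than a bare boolean, lets the server tell the client precisely what to request next, which keeps prompts to the human to a minimum.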

While recent revisions to the MCP authorization spec resolve the most pressing issues, enterprises face additional deployment challenges beyond the most glaring obstacles. These include multi-tenancy complexities, auditing capabilities, and single sign-on (SSO). For a complete analysis of enterprise MCP deployment challenges, see 5 Enterprise Challenges in Deploying Remote MCP Servers.

Open questions and recommendations

The following open questions are drawn from outstanding issues the MCP spec has yet to fully resolve. While we can only speculate as to the eventual shape the protocol’s authorization requirements will take, understanding its current state can provide much-needed insight.

How clients establish trust

When it comes to DCR, knowing that the information you get about a client is trustworthy remains paramount for MCP server owners. While it's not part of the official specification, SEP-991 proposes OAuth Client ID Metadata Documents as a mechanism for establishing trust when clients and servers have no pre-existing relationship. This would allow servers to verify client identities through domain-hosted metadata rather than relying solely on anonymous DCR.

Server-side authorization

In an effort to simplify client implementations and eliminate DCR requirements, SEP-1299 proposes moving OAuth flow management from MCP clients to MCP servers. By using HTTP Message Signatures for session binding, resource servers could leverage existing authorization modalities and MCP servers could manage multiple authorization contexts. The PR promises "a more secure, authenticated connection between the client and the server," but is not an official part of the spec (yet).

Scope discovery and AX

How should an MCP client know what scopes to request during the OAuth flow? The specification doesn’t define scopes—implementors must decide. A fixed scope like access_mcp may suffice in some cases, and the ASM can list scopes_supported, though without indicating which scopes are needed for a specific action. Scopes not lining up with agent intent can lead to AX friction, but future revisions will ideally minimize back-and-forth by including scope recommendations in WWW-Authenticate errors.

DCR security

A key future consideration is how secure and standardized Dynamic Client Registration should be. The current OAuth specification allows for flexibility, but this can open the door to client impersonation or unverified registrations. This becomes particularly important for federated environments or public tools, where the authorization server needs to assert trust before issuing credentials.

Production implementations require additional hardening beyond base RFC 7591. Anonymous DCR creates legitimate security concerns, especially for enterprise. These risks include difficulty monitoring, auditing, and mitigating potential attacks. Organizations need verification flows, IP reputation checks, and risk-based controls to safely implement DCR at scale.

For detailed guidance on implementing production-ready and scalable DCR, see our guide on Tips to Harden OAuth Dynamic Client Registration in MCP Servers.

Handling 403 over SSE connections

SSE streams complicate error handling. If a token lacks necessary scopes:

The server could filter out unauthorized events, or

terminate the stream with an error (e.g., 403/401).

Since SSE uses a persistent stream post-200 OK, there's no built-in way to signal errors mid-connection. A custom event could be used, but isn’t standard. For now, scopes should be enforced on initial connection or request at the HTTP level rather than SSE.
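Enforcing scopes at the HTTP level could look like the sketch below, which builds a 403 response carrying the RFC 6750 `insufficient_scope` error before any stream is opened. The function name and the `(status, headers)` return shape are assumptions, not tied to a particular web framework.

```python
def insufficient_scope_response(required_scopes: set) -> tuple:
    """Build a 403 status and headers for a request lacking scopes.

    Uses the RFC 6750 Bearer challenge with error="insufficient_scope"
    and a scope attribute naming what the client should request.
    """
    scope = " ".join(sorted(required_scopes))
    challenge = f'Bearer error="insufficient_scope", scope="{scope}"'
    return 403, {"WWW-Authenticate": challenge}


# Scope names are illustrative.
status, headers = insufficient_scope_response({"docs:view", "docs:delete"})
```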

Universal JWT validation via JWKs

Ideally, an MCP server accepts JWTs directly from a trusted IdP, verifies them via JWKs, and authorizes based on included claims or scopes—without issuing its own token. This requires:

IdP-issued JWTs to include necessary claims.

MCP server to validate signatures via jwks_uri.

This stateless approach avoids the need for token mapping and call-backs to the IdP. However, the specification currently leans toward requiring MCP-issued tokens.
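The key-selection step of JWKS-based validation can be sketched with the standard library alone: read the `kid` from the JWT header and find the matching entry in the key set fetched from `jwks_uri`. This sketch deliberately stops short of signature verification, which should be delegated to a vetted JWT library; the helper names and sample keys are hypothetical.

```python
import base64
import json
from typing import Optional


def b64url_decode(segment: str) -> bytes:
    """Decode an unpadded base64url segment as used in JWTs."""
    padding = "=" * (-len(segment) % 4)
    return base64.urlsafe_b64decode(segment + padding)


def select_jwk(token: str, jwks: dict) -> Optional[dict]:
    """Return the JWK whose kid matches the token header, or None."""
    header = json.loads(b64url_decode(token.split(".")[0]))
    kid = header.get("kid")
    if kid is None:
        return None
    for key in jwks.get("keys", []):
        if key.get("kid") == kid:
            return key
    return None


# Build an illustrative (unsigned, non-functional) token and key set.
sample_header = base64.urlsafe_b64encode(
    json.dumps({"alg": "RS256", "kid": "k2"}).encode()
).rstrip(b"=").decode()
sample_token = f"{sample_header}.e30.signature-placeholder"
sample_jwks = {"keys": [{"kid": "k1", "kty": "RSA"}, {"kid": "k2", "kty": "RSA"}]}
selected = select_jwk(sample_token, sample_jwks)
```

Caching the key set and refetching it only when an unknown `kid` appears keeps validation free of per-request calls to the IdP.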

Exploring MCP authorization approaches

As MCP matures from a local developer tool into a remote-first protocol for secure, cross-system AI integration, its authorization model must evolve accordingly. The June 2025 revision represents a significant step forward in aligning MCP with enterprise needs.

Looking ahead, the community continues to refine client registration approaches through proposals like SEP-991 (Client ID Metadata Documents) and SEP-1299 (Server-Side Authorization Management), which both aim to address the remaining friction points that impede enterprise adoption.

The good news is that the broader OAuth ecosystem provides a wealth of battle-tested solutions. By strictly treating MCP servers as resource servers, reusing external identity providers, and embracing modern standards, teams can reduce implementation complexity and avoid reinventing the wheel.

However, while the specification provides the foundation, production deployment requires going beyond the basics.

How Descope simplifies MCP authorization

Implementing the latest MCP authorization specification for production environments takes expertise, knowledge, and patience. Typical implementations mean navigating complex OAuth flows, spending cycles to harden your DCR against novel threats, and managing tokens in niche ways. Challenges like these can (understandably) overwhelm even the most experienced enterprise identity teams.

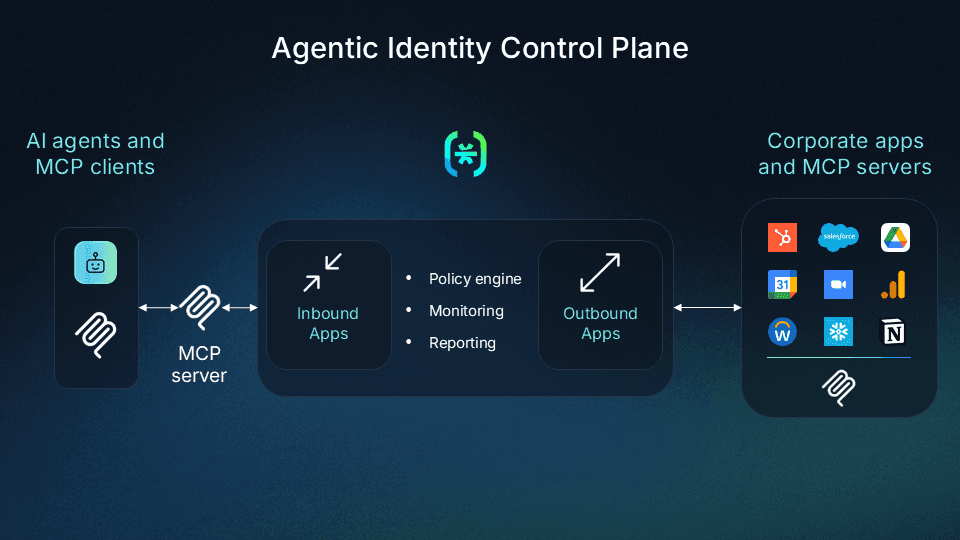

Descope provides MCP Auth SDKs that help organizations add OAuth authorization, PKCE, DCR, and other authorization capabilities required by the MCP specification in three lines of code. For organizations building internal MCP servers, the Agentic Identity Control Plane provides unparalleled control, traceability, and policy-based governance. Descope provides RFC 7591-compliant DCR with enterprise-grade hardening built in: verification flows, IP reputation checks, threat intelligence integration, and risk-based auth without the hassle.

Whether you're building your first MCP server from scratch or scaling a project to production, Descope eliminates auth complexity so you can focus on building the next big AI experience.

Explore Descope's AI-focused demos or start building now with a Free Forever account.